AI Outside In: Confidence Everywhere!

To make information on Artificial Intelligence more useful and accessible to everyone, from students to non-technical people curious about how AI works, we’ve teamed up with Google’s People + AI Research (PAIR) initiative, whose mission is to make partnerships between people and AI more productive, engaging, and fair.

Here is the first post in a three-part series, which we're calling "AI Outside In," by PAIR Writer in Residence, independent tech writer and blogger David Weinberger. He offers his outside perspective on key developments in AI research and explains central concepts in the field of machine learning, looking at the technology within a broader context of social issues and ideas. His opinions are his own and do not necessarily reflect those of Google.

Machines can learn, but what do they know?

Machine learning systems may be portrayed in much of the media as our new overlords, dictating policy while blithely driving our cars for us, but these systems are actually quite humble. It may seem counterintuitive, but we could learn from their humility.

Machine learning works by inspecting the data we provide it, looking for relationships among the many small points of data, and connecting them into what it thinks is a working model that will let it categorize inputs or make predictions. To take a simple example, you could use the 202,000 images of celebrities in the CelebA database to train a machine learning system. Forty different aspects of those images have been labeled by patient humans: Is the face oval-shaped? Is the person wearing a hat? Is the person smiling? By analyzing all those pixels, the system will associate some patterns with those aspects.

For example, the system might have learned that faces labeled “smiling” often have a horizontal set of light pixels (which we humans would call “teeth”) in the bottom third of the image. But of course, the pixel patterns are far more complex than that, for not everyone shows teeth when smiling, and raised eyebrows can indicate a smile, especially in conjunction with other arrangements of facial pixels. Machine learning doesn’t know about teeth, eyebrows, or dimples that show up when someone grins. It just knows about the probability that some patterns of pixels are associated with the label “smiling.” And some of those patterns are more reliably associated with smiling than others.

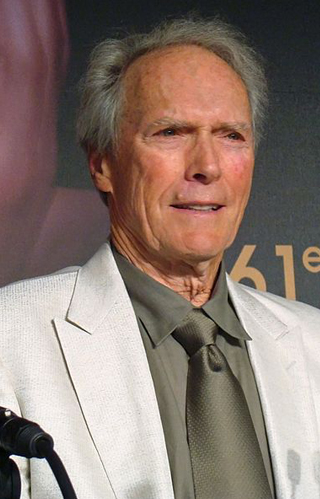

That means that the machine learning system doesn’t know anything, if knowledge implies certitude, as it has at times in Western thought. Machine learning only yields probabilistic judgments: The system is likely to be more certain that a photo of Meghan Markle smiling should go in the Smiling bucket than that a photo of Clint Eastwood at his happiest belongs there.

Creative Commons Attribution 2.0 Generic license.

Northern Ireland Office:

https://www.flickr.com/photos/niogovuk/41014635181/

Source: Wikimedia Commons

https://commons.wikimedia.org/wiki/File:Meghan_Markle_-_2018_(cropped).jpg

Creative Commons Attribution-Share Alike 1.0 Generic license.

Fanny Bouton:

https://www.flickr.com/photos/73783695@N00/

Source: Wikimedia Commons

https://commons.wikimedia.org/wiki/File:ClintEastwoodCannesMay08.jpg

Lacking confidence: a strength

We humans face the same type of decisions all the time because the world is analog and not neatly binary. For example, my wife has an upside-down smile that is beloved in the family but probably not recognizable elsewhere.

The difference, though, is that the machine learning system will (or at least should) always report its conclusions in probabilistic terms, while we humans often tend to be more binary than that. We have a set of words we sometimes use to express the degree of confidence we have in what we’re saying, but we don’t always use them, and in some circumstances (politics, I’m looking at you) we rarely use them.

Worst of all, we humans often take expressing lack of confidence in what we’re saying as a sign of weakness. For machine learning, it’s a sign of strength.

In fact, it’s not just a strength. It’s a tool. Let’s say we’ve trained a system not on photos of celebrities but on scans of suspicious bodily growths, and we want the system to tell us whether a particular patient’s scan seems benign or malignant. This is a case where we’d rather include iffy tumors in the Malignant bucket, because missing a malignancy (a false negative) is more consequential for the patient than flagging a benign tumor for further inspection (a false positive).

We can control the machine’s behavior by changing the confidence level we want the system to use when sorting scans into malignant and benign piles. If we tell it to put a scan into the malignant pile only if it’s 99% sure, then it will miss a lot of malignant tumors. If we instead tell it to count as malignant any growth that it thinks has even a 1% chance of being malignant, it will catch almost all of the malignancies, but lots of patients will get alarming news and will undergo further tests that will turn out to have been unnecessary. We can tune that confidence threshold to get the balance right.
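For readers who like to see the idea in code, here is a minimal sketch of that trade-off in Python. Everything in it is an assumption made for illustration: the ten scans, their confidence scores, and the `sort_scans` helper are all invented, and no real medical system is being described. It simply counts how many malignancies are missed and how many false alarms are raised at three different thresholds.

```python
# Hypothetical data, invented for illustration: each pair is
# (the system's confidence that the scan is malignant, the true diagnosis).
scans = [
    (0.995, True),
    (0.90, True),
    (0.60, True),
    (0.30, True),
    (0.05, True),
    (0.70, False),
    (0.40, False),
    (0.20, False),
    (0.02, False),
    (0.005, False),
]


def sort_scans(scans, threshold):
    """Put a scan in the malignant pile when its confidence is at or above
    the threshold, then count the two kinds of mistakes."""
    # False negatives: truly malignant scans that landed in the benign pile.
    missed = sum(1 for p, malignant in scans if malignant and p < threshold)
    # False positives: truly benign scans that landed in the malignant pile.
    false_alarms = sum(1 for p, malignant in scans if not malignant and p >= threshold)
    return {"missed_malignancies": missed, "false_alarms": false_alarms}


for threshold in (0.99, 0.50, 0.01):
    print(threshold, sort_scans(scans, threshold))
```

With these made-up numbers, the 99% threshold misses four of the five malignancies and raises no false alarms, while the 1% threshold misses none and sends four of the five healthy patients for further tests. The 50% setting lands in between.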

Who's training whom?

There is no doubt that machine learning is going to become the engine behind an increasing number of applications we use every day. It’s already hard at work on our mobile phones, for example, where it powers the systems that predict the weather, choose the best way to navigate from A to B, and guess the next word we’re going to type on that tiny little screen. In some circumstances, we are told the machine’s confidence level in its outputs, as when a weather app tells us that there’s a 20% chance of showers. The more that predictions and classifications come with confidence levels, the faster we humans will be trained that there is no shame in admitting that, like the AI we’re building, we don’t actually know that we are 100% right. We just have degrees of confidence.

For the sake of our ability to learn from one another and even just to discuss topics that we disagree about, this is one lesson that, to paraphrase The Simpsons, we can’t learn fast enough from our new, uncertain overlords.

Original illustration by Anna Young.

This work is licensed under a Creative Commons Attribution 4.0 International License.
